Abstract

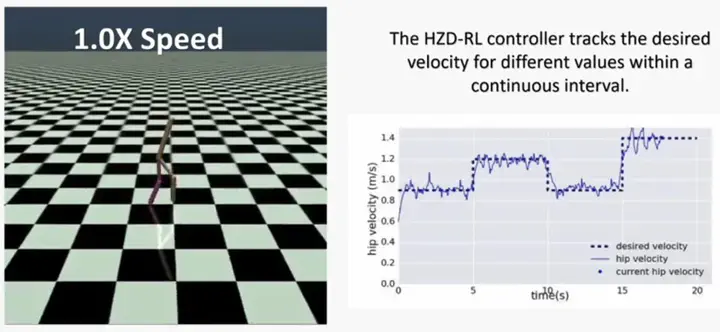

The design of feedback controllers for bipedal robots is challenging due to the hybrid nature of their dynamics and the complexity imposed by high-dimensional bipedal models. In this paper, we present a novel approach for the design of feedback controllers using Reinforcement Learning (RL) and Hybrid Zero Dynamics (HZD). Existing RL approaches for bipedal walking are inefficient because they do not consider the underlying physics, often require substantial training, and may produce controllers that are not applicable to real robots. HZD is a powerful tool for bipedal control that provides local stability guarantees for the walking limit cycles. We propose a nontraditional RL structure that embeds the HZD framework into policy learning. More specifically, we use RL to find a control policy that maps the robot’s reduced-order states to a set of parameters that define the desired trajectories for the robot’s joints through the virtual constraints. These trajectories are then tracked using an adaptive PD controller. The method results in a stable and robust control policy that is able to track variable speeds within a continuous interval. Robustness of the policy is evaluated by applying external forces to the torso of the robot. The proposed RL framework is implemented and demonstrated in OpenAI Gym with the MuJoCo physics engine, based on the well-known RABBIT robot model.
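To make the structure of the pipeline concrete, the following is a minimal sketch of the step from policy output to joint torques. It rests on several assumptions that the abstract does not state: the desired joint trajectories are taken to be Bézier polynomials in a normalized phase variable `s` (a common choice in the HZD literature), the coefficient array `alpha` stands in for the parameters produced by the learned policy, and the PD gains `kp` and `kd` are fixed rather than adaptive. All function and variable names are hypothetical.

```python
from math import comb


def bezier(coeffs, s):
    """Evaluate a Bezier polynomial with coefficients `coeffs` at phase s in [0, 1]."""
    m = len(coeffs) - 1
    return sum(
        c * comb(m, k) * s**k * (1 - s) ** (m - k) for k, c in enumerate(coeffs)
    )


def bezier_derivative(coeffs, s):
    """Derivative of the Bezier polynomial with respect to the phase s."""
    m = len(coeffs) - 1
    return sum(
        m * (coeffs[k + 1] - coeffs[k]) * comb(m - 1, k) * s**k * (1 - s) ** (m - 1 - k)
        for k in range(m)
    )


def pd_torques(q, dq, alpha, s, ds, kp, kd):
    """Track the desired trajectories with a fixed-gain PD law (assumption:
    the paper uses an adaptive PD controller, which is not reproduced here).

    q, dq  : measured joint positions and velocities
    alpha  : one list of Bezier coefficients per joint (stand-in for policy output)
    s, ds  : phase variable and its time derivative
    """
    torques = []
    for qi, dqi, coeffs in zip(q, dq, alpha):
        q_des = bezier(coeffs, s)                    # desired joint position
        dq_des = bezier_derivative(coeffs, s) * ds   # chain rule: d/dt = d/ds * ds/dt
        torques.append(kp * (q_des - qi) + kd * (dq_des - dqi))
    return torques


# Usage: one joint, linear desired trajectory from 0 to 1 over the step.
tau = pd_torques(q=[0.0], dq=[0.0], alpha=[[0.0, 1.0]], s=0.5, ds=2.0, kp=10.0, kd=1.0)
```

In this sketch the policy would be queried for `alpha` from the reduced-order state, and the low-level tracking loop would call `pd_torques` at every control step; how often the policy is queried and how the gains adapt are left open here.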